GVRS: A Fast, File-Backed API for Managing Raster Data

Introduction

The Gridfour Virtual Raster Store (GVRS) is a file-backed software module that helps Java applications manage raster (grid) data in situations where the size of the data exceeds what could reasonably be kept in memory. To do so, it provides utilities that allow an application to seamlessly swap blocks of the grid between memory and data files. The GVRS library and file format also provide a way of storing raster data in files that can be shared between applications or used across multiple application runs. And, in cases where storage space or transmission bandwidth is limited, GVRS provides built-in data compression that can substantially reduce storage requirements, in some cases by more than a factor of four.

This article gives an introduction to the Java API for GVRS. It covers some of the basic ideas of the API and offers examples of how it may be used. If you would like to use GVRS in your own applications, the Gridfour Project Wiki provides a "Jump Start" guide as well as other articles on both the GVRS API and the Gridfour software library in general. The open-source software for the API is available from the Gridfour Software Project.

Sample Data

An article that describes an API for manipulating and storing raster data would surely benefit from having a good source of data to use as an example. We are fortunate to have two: ETOPO1 and GEBCO_2019. These products give world-wide Earth surface elevations and ocean depth (bathymetry) values in a regular geographic coordinate grid.

In the ETOPO1 product, grid points are given at a regular spacing of 1 minute of arc (i.e., 60 rows or columns for each degree of latitude or longitude). Thus ETOPO1 includes (360x60)x(180x60) data values, or about 233 million samples. Storing that many numeric values in memory requires a lot of capacity, but is not out of reach for a modern computer. The GEBCO product, however, uses a finer resolution based on a grid spacing of 15 seconds of arc (i.e., 240 rows or columns for each degree of latitude or longitude). That spacing results in a data collection containing 3.7 billion samples. And while there are plenty of computers with sufficient memory to hold that much data, it wouldn't leave much room for anything else.
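The sample counts quoted above follow directly from the grid spacing. The short sketch below reproduces the arithmetic; the class and method names are illustrative only and are not part of the Gridfour library. The memory estimate assumes 4 bytes per sample.

```java
// Illustrative arithmetic only; not part of the Gridfour API.
class GridSizeEstimate {

    /** Number of samples in a global grid with the given samples per degree. */
    static long sampleCount(int samplesPerDegree) {
        long columns = 360L * samplesPerDegree; // full range of longitude
        long rows = 180L * samplesPerDegree;    // full range of latitude
        return rows * columns;
    }

    /** Raw storage in gibibytes, assuming 4 bytes per sample. */
    static double rawGiB(long samples) {
        return samples * 4.0 / (1024.0 * 1024.0 * 1024.0);
    }

    public static void main(String[] args) {
        long etopo1 = sampleCount(60);  // 1 minute of arc
        long gebco = sampleCount(240);  // 15 seconds of arc
        System.out.printf("ETOPO1: %,d samples, %.2f GiB%n", etopo1, rawGiB(etopo1));
        System.out.printf("GEBCO:  %,d samples, %.2f GiB%n", gebco, rawGiB(gebco));
    }
}
```

The two counts come to 233,280,000 and 3,732,480,000 samples, matching the figures used throughout this article.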

A Tiling Scheme

Fortunately, when dealing with such large data sets, applications seldom need to access the entire collection all at once. So an obvious solution to the problem of memory use is to store the data in a file and load (or store) pieces of it on an as-needed basis. In essence, this approach follows the pattern of a classic virtual-memory management scheme (thus the "virtual" in "Virtual Raster"). The Gridfour API allows applications to access cells in the grid in a seamless manner using an efficient cache mechanism to provide high-speed access to data.

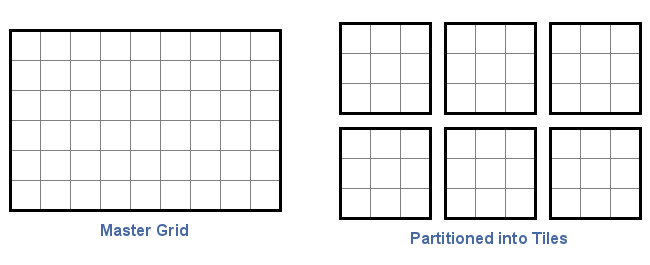

The basic idea of tiling a grid can be seen in the accompanying image. In this case, a grid consisting of six rows and nine columns is divided into six 3-by-3 tiles.

In GVRS, the tile size is arbitrary, though all tiles must be of a uniform size. Applications are free to specify tile sizes according to their needs. In this case, a 2-by-9 tile would have worked just fine. In fact, a 4-by-5 tile size would also work, even though the tiles would not evenly divide the 6-by-9 master grid. The GVRS API handles any extra cells internally and their management is transparent to the application.
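To make the tile arithmetic concrete, here is a minimal sketch of how a grid cell might be mapped to a tile and to a position within that tile. The class name and the row-major numbering of tiles are assumptions made for illustration; the actual GVRS internals may organize things differently.

```java
// Hypothetical helper for illustration; not the GVRS implementation.
class TileIndexSketch {
    final int nRowsInTile;
    final int nColsInTile;
    final int nRowsOfTiles;
    final int nColsOfTiles;

    TileIndexSketch(int nRows, int nCols, int nRowsInTile, int nColsInTile) {
        this.nRowsInTile = nRowsInTile;
        this.nColsInTile = nColsInTile;
        // Round up so that partial tiles along the edges are counted.
        this.nRowsOfTiles = (nRows + nRowsInTile - 1) / nRowsInTile;
        this.nColsOfTiles = (nCols + nColsInTile - 1) / nColsInTile;
    }

    /** Total number of tiles needed to cover the grid. */
    int tileCount() {
        return nRowsOfTiles * nColsOfTiles;
    }

    /** Index of the tile that contains the cell, counting tiles row by row. */
    int tileIndex(int row, int col) {
        return (row / nRowsInTile) * nColsOfTiles + (col / nColsInTile);
    }

    /** Position of the cell inside its tile, counting cells row by row. */
    int indexInTile(int row, int col) {
        return (row % nRowsInTile) * nColsInTile + (col % nColsInTile);
    }

    public static void main(String[] args) {
        TileIndexSketch even = new TileIndexSketch(6, 9, 3, 3);
        TileIndexSketch uneven = new TileIndexSketch(6, 9, 4, 5);
        System.out.println("3-by-3 tiles: " + even.tileCount());
        System.out.println("4-by-5 tiles: " + uneven.tileCount());
        System.out.println("Cell (4,7) lies in tile " + even.tileIndex(4, 7)
            + " at offset " + even.indexInTile(4, 7));
    }
}
```

With 4-by-5 tiles, the 6-by-9 grid needs four tiles, and the tiles along the bottom and right edges simply contain cells that fall outside the grid.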

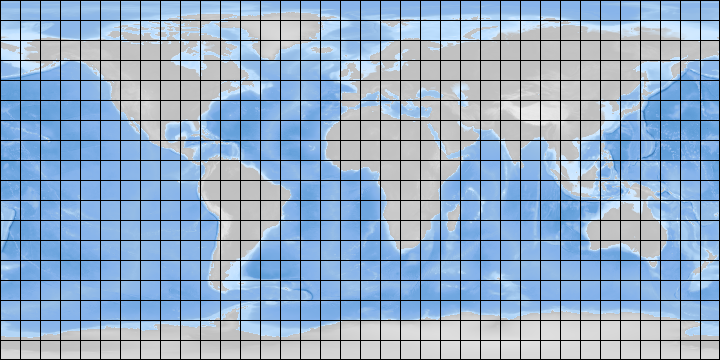

The accompanying figure illustrates how a tiling scheme works. A collection of surface elevation and bathymetry data could be divided into regular tiles ten degrees across. An application requiring access to information in Europe would load the relevant tiles without needing to access information from South America.

As part of the Gridfour demo module, we've included an application called PackageData that can be used to store the ETOPO1 and GEBCO_2019 sample data sets in the GVRS format. In the case of the ETOPO1 data set, the PackageData demonstration application specifies a tile size of 120 rows by 180 columns. In terms of geographic coordinates, the product's cell spacing is 1 minute of arc, or 1/60th of a degree. So a tile spanning 120 rows and 180 columns covers an area that is 2 degrees of latitude in height and 3 degrees of longitude in width. This size was chosen after some experimentation because it gives good data compression results and provides a convenient size for access.
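The relationship between tile dimensions and geographic extent is simple arithmetic, sketched below for the ETOPO1 case. The class is purely illustrative and does not come from the PackageData source code.

```java
// Illustrative arithmetic for the ETOPO1 tile size; not taken from PackageData.
class Etopo1TileExtent {
    static final int CELLS_PER_DEGREE = 60; // 1 minute of arc per cell

    /** Angular span, in degrees, covered by the given number of cells. */
    static double degreesSpanned(int cells) {
        return (double) cells / CELLS_PER_DEGREE;
    }

    /** Number of tiles needed along one axis, rounding up for partial tiles. */
    static int tilesNeeded(int gridCells, int tileCells) {
        return (gridCells + tileCells - 1) / tileCells;
    }

    public static void main(String[] args) {
        int gridRows = 180 * CELLS_PER_DEGREE;
        int gridCols = 360 * CELLS_PER_DEGREE;
        System.out.println("Tile height in degrees: " + degreesSpanned(120));
        System.out.println("Tile width in degrees:  " + degreesSpanned(180));
        System.out.println("Rows of tiles:    " + tilesNeeded(gridRows, 120));
        System.out.println("Columns of tiles: " + tilesNeeded(gridCols, 180));
    }
}
```

Under these assumptions the global ETOPO1 grid divides evenly into 90 rows and 120 columns of tiles.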

Tiling is a core feature of the GVRS API and is deeply involved in all its operations. In fact, even a small grid is treated as being tiled, though it is completely reasonable to specify a tile size that matches that of the entire grid.

A few other details about GVRS tiling are worth noting:

- Tiles can be written or read in any order.

- Not all tiles in the specification need to be populated with data. The overhead for empty tiles is small.

- If the optional GVRS data compression logic is applied, it treats each tile as a separate block of data that is compressed individually, without reference to other tiles. This approach allows tiles to be written or read independently according to the needs of the calling application.

- A key feature of the GVRS file-backed API is that it maintains an in-memory cache to store tiles for rapid access. This cache works to minimize file-access operations.
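The idea of a tile cache can be sketched with standard Java collections. The example below uses a least-recently-used (LRU) eviction policy, which is an assumption chosen for illustration; this article does not say which policy GVRS actually applies. The class name and the use of an int array to stand in for a tile are also hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// A generic LRU cache keyed by tile index; an illustration, not the GVRS cache.
class TileCacheSketch extends LinkedHashMap<Integer, int[]> {
    private static final long serialVersionUID = 1L;
    private final int maxTiles;

    TileCacheSketch(int maxTiles) {
        // The third argument selects access order, so the map keeps the
        // least recently used entry at the front.
        super(16, 0.75f, true);
        this.maxTiles = maxTiles;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<Integer, int[]> eldest) {
        // A real raster store would write a modified tile back to its file
        // before discarding it. This sketch simply drops the tile.
        return size() > maxTiles;
    }

    public static void main(String[] args) {
        TileCacheSketch cache = new TileCacheSketch(2);
        cache.put(0, new int[9]);
        cache.put(1, new int[9]);
        cache.get(0);             // tile 0 becomes the most recently used
        cache.put(2, new int[9]); // exceeds capacity, so tile 1 is evicted
        System.out.println("Tiles in cache: " + cache.keySet());
    }
}
```

Whatever the policy, the goal is the same: repeated access to nearby cells should be served from memory rather than from the file.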

Creating a GVRS File

The GVRS library is a tool for both creating raster files and accessing them. Writing a GVRS file is a three-step process:

- A specification is created giving the grid and tile size parameters. Other metadata may be specified as needed.

- The grid specification and a file-path specification are used to create a new file for writing data. The initial file is treated as an empty collection of tiles and will typically be smaller than 1 kilobyte in size.

- Values are added to the file one grid-point at a time. The internal bookkeeping and management of tiles is mostly transparent to the calling application.
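The three steps above can be illustrated with a deliberately simplified, self-contained toy. The class below is hypothetical: it is not the GVRS API and does not write the GVRS file format. It only mirrors the pattern of supplying grid and tile dimensions, creating a file, and then storing values one grid point at a time. For real code, see the Jump Start articles and the PackageData application.

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;

// Hypothetical toy class; it is NOT the GVRS file format or API.
class ToyTiledRasterFile implements AutoCloseable {
    private static final int HEADER_BYTES = 4 * Integer.BYTES;
    private final RandomAccessFile raf;
    private final int nRowsInTile;
    private final int nColsInTile;
    private final int nColsOfTiles;

    // Steps 1 and 2: accept the grid and tile dimensions, then create the file.
    ToyTiledRasterFile(File file, int nRows, int nCols,
                       int nRowsInTile, int nColsInTile) throws IOException {
        this.nRowsInTile = nRowsInTile;
        this.nColsInTile = nColsInTile;
        this.nColsOfTiles = (nCols + nColsInTile - 1) / nColsInTile;
        raf = new RandomAccessFile(file, "rw");
        raf.setLength(0);     // start from an empty file
        raf.writeInt(nRows);  // the header records the specification
        raf.writeInt(nCols);
        raf.writeInt(nRowsInTile);
        raf.writeInt(nColsInTile);
    }

    // File position of a cell; tiles are laid out one after another.
    private long position(int row, int col) {
        long tile = (long) (row / nRowsInTile) * nColsOfTiles + col / nColsInTile;
        long cell = (long) (row % nRowsInTile) * nColsInTile + col % nColsInTile;
        long cellsPerTile = (long) nRowsInTile * nColsInTile;
        return HEADER_BYTES + (tile * cellsPerTile + cell) * Integer.BYTES;
    }

    // Step 3: store one grid point at a time.
    void writeValue(int row, int col, int value) throws IOException {
        raf.seek(position(row, col));
        raf.writeInt(value);
    }

    // Reads back a cell that was previously written.
    int readValue(int row, int col) throws IOException {
        raf.seek(position(row, col));
        return raf.readInt();
    }

    long fileLength() throws IOException {
        return raf.length();
    }

    @Override
    public void close() throws IOException {
        raf.close();
    }

    public static void main(String[] args) throws IOException {
        File file = File.createTempFile("toy-raster", ".bin");
        file.deleteOnExit();
        try (ToyTiledRasterFile raster = new ToyTiledRasterFile(file, 6, 9, 3, 3)) {
            System.out.println("Initial size in bytes: " + raster.fileLength());
            raster.writeValue(4, 7, -4321);
            System.out.println("Read back: " + raster.readValue(4, 7));
        }
    }
}
```

The toy omits bounds checking, caching, a tile directory, and compression, all of which a real raster store needs. Its only purpose is to show that the calling code works in terms of rows and columns while the tile bookkeeping stays hidden.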

The Gridfour Project Wiki includes a series of articles on reading and writing data to the Gridfour Virtual Raster Store. You can find these at GVRS Jump Start 01: Reading GVRS Files and GVRS Jump Start 03: Storing Data. You can also study the PackageData.java demonstration application and other example applications in the Gridfour demo module.

What if you don't want to keep a GVRS file after you're done with it?

While GVRS needs a file to support its virtual raster operations, applications do not have to store the file after they are finished with it. The main GvrsFile API provides a constructor that allows an application to tell GVRS to use a temporary file. Temporary files are removed from the file system when the application closes the file. See the GVRS Javadoc for more information.

Data Compression

Data compression is an interesting topic and is discussed in more detail elsewhere in these notes (see Gridfour Raster Data Compression Algorithms and Lossless Compression for Floating Point Data). Here, we simply note its impact on the storage for ETOPO1 and GEBCO_2019 data. In its native, uncompressed form, ETOPO1 data is stored as a 4-byte integer. GEBCO_2019 is stored as a 4-byte float. Both of these would require 32 bits per sample if they were stored without data compression. The Gridfour library provides lossless data compression for both integer and float formats. Because integer forms tend to compress better than floating-point data, GEBCO data compresses better if it is converted to an integer form. In the table below, data for GEBCO is given for both its native floating-point format and as scaled integers. GEBCO x 1 is rounded to the nearest meter, GEBCO x 2 to the nearest half meter. The larger scaling factor increases the amount of information to be stored in the output file, and thus the bits-per-sample value increases.

| Product | Size (bits/sample) | Number of Samples | Time to Process (sec) |
|---|---|---|---|
| ETOPO1 | 3.645 | 233,280,000 | 64.0 |
| GEBCO (float) | 15.354 | 3,732,480,000 | 765.2 |
| GEBCO x 1 | 2.605 | 3,732,480,000 | 1210.6 |
| GEBCO x 2 | 3.1961 | 3,732,480,000 | 1233.0 |
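The scaled-integer conversion behind the GEBCO x 1 and GEBCO x 2 rows can be sketched as follows. The class and method names are hypothetical and are not the Gridfour API. Note that while the compression itself is lossless, the rounding step discards any detail finer than one over the scaling factor.

```java
// Hypothetical helper showing scaled-integer conversion; not the Gridfour API.
class ScaledIntegerSketch {

    /** Converts a floating-point value to an integer code using the scale. */
    static int toScaledInt(float value, float scale) {
        return Math.round(value * scale);
    }

    /** Recovers the (rounded) floating-point value from its integer code. */
    static float fromScaledInt(int stored, float scale) {
        return stored / scale;
    }

    public static void main(String[] args) {
        float depth = -1234.3f; // sample value in meters, chosen arbitrarily
        for (float scale : new float[] {1f, 2f}) {
            int code = toScaledInt(depth, scale);
            System.out.println("scale " + scale + ": stored " + code
                + ", recovered " + fromScaledInt(code, scale));
        }
    }
}
```

With a scale of 1 the sample value is recovered as -1234.0, and with a scale of 2 it is recovered as -1234.5, the nearest half meter.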

For comparison, the storage time for the uncompressed version of ETOPO1 was 8.6 seconds. As the values in the table show, compressing the data requires more processing and increases the time to process to 64.0 seconds. However, most data compression techniques in use today require far less processing to decompress data than to compress it. This generalization is also true for all the currently implemented Gridfour data compression utilities. While it took over a minute to compress the ETOPO1 data, the time required to read the entire 233 million points in the compressed data set was only 6.3 seconds.

Even though the integer-coded GEBCO x 1 and the ETOPO1 products represent elevations with the same precision (as integral meters), the bits-per-sample value for GEBCO x 1 is lower than that for ETOPO1. This difference occurs because the sample points in the GEBCO grid are spaced closer together than those for ETOPO1 (15 seconds of arc versus 1 minute of arc). With the closer spacing, there tends to be less variation from sample to sample, which leads to an improved predictability in the data. With improved predictability, better data compression ratios can be attained.
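The effect of sample spacing on predictability can be demonstrated with a small experiment. The sketch below samples a synthetic, smooth elevation profile at two spacings and measures how far each sample is from the one before it. Both the profile and the "previous value" predictor are assumptions made for illustration; real terrain is rougher, and the predictors used by the Gridfour compressors are described in the articles cited above.

```java
// Illustrative experiment with synthetic data; not Gridfour's predictor.
class PredictabilitySketch {

    /** Hypothetical smooth elevation profile, in meters, along a parallel. */
    static double elevation(double lonDegrees) {
        return 2000.0 * Math.sin(Math.toRadians(lonDegrees * 8.0))
             + 300.0 * Math.sin(Math.toRadians(lonDegrees * 47.0));
    }

    /** Mean absolute error when each sample is predicted by its predecessor. */
    static double meanAbsResidual(int samplesPerDegree, double spanDegrees) {
        int n = (int) Math.round(spanDegrees * samplesPerDegree);
        long prior = Math.round(elevation(0.0));
        double sum = 0.0;
        for (int i = 1; i <= n; i++) {
            long z = Math.round(elevation((double) i / samplesPerDegree));
            sum += Math.abs(z - prior);
            prior = z;
        }
        return sum / n;
    }

    public static void main(String[] args) {
        System.out.println("1-minute spacing:  " + meanAbsResidual(60, 10.0));
        System.out.println("15-second spacing: " + meanAbsResidual(240, 10.0));
    }
}
```

The finer spacing produces smaller residuals, and small residuals can generally be encoded in fewer bits than the raw values.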

Adding Supplemental Content with Metadata Records

In order to maintain a simple design, the GVRS API presents a deliberately minimal feature set. Even so, we recognize that many users have application-specific requirements for the product. In some cases, users may wish to attach supplemental data to a GVRS file. This requirement can be met through the use of the Metadata Record feature.

Metadata Records are blocks of text or binary data that can be stored as part of a GVRS file. The GVRS API treats these elements as opaque and does not perform any operation related to their content. These elements may be stored or retrieved using identification tags provided by the application.
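The notion of an opaque, tag-keyed record can be sketched with a simple in-memory map. The class below is hypothetical and is not the GVRS metadata API. It stores content as raw bytes and never interprets it, which is the sense in which the records are opaque.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical in-memory stand-in; not the GVRS metadata API.
class OpaqueMetadataSketch {
    private final Map<String, byte[]> records = new LinkedHashMap<>();

    /** Stores binary content under an application-defined tag. */
    void put(String tag, byte[] content) {
        records.put(tag, content.clone());
    }

    /** Stores text content; it is kept as bytes and never interpreted. */
    void putText(String tag, String text) {
        put(tag, text.getBytes(StandardCharsets.UTF_8));
    }

    /** Returns a copy of the content for the tag, or null if there is none. */
    byte[] get(String tag) {
        byte[] content = records.get(tag);
        return content == null ? null : content.clone();
    }

    /** Returns the content decoded as UTF-8 text, or null if there is none. */
    String getText(String tag) {
        byte[] content = records.get(tag);
        return content == null ? null : new String(content, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        OpaqueMetadataSketch metadata = new OpaqueMetadataSketch();
        metadata.putText("Author", "Example text supplied by the application");
        System.out.println(metadata.getText("Author"));
    }
}
```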

The ETOPO1 and GEBCO products provide an example of how this feature may be used. Both data sets are based on geophysical information and, naturally, there are industry standards for specifying metadata related to their content. Because GVRS is intended to be a general-purpose utility, adding direct support for such standards is outside its scope. However, the PackageData demonstration application implements code for storing relevant metadata in a GVRS file.

The metadata bundled with the demonstration code is based on the Well-Known Text (WKT) standard, which is supported by many Geographic Information Systems. Well-Known Text files provide information about coordinate systems (map projections), units of measure, specifications for Earth's size and shape ("datums"), and other information needed to accurately represent the data on a map. While neither ETOPO1 nor GEBCO supplies WKT files in its distribution, both include relevant specifications on their product web sites. For the demo, we used the information to create a file called GlobalMSL.prj that contains metadata in WKT format.

For more information about using GVRS metadata, please see our Wiki article GVRS Jump Start 05: Metadata.

Conclusion

The information given in this article should be enough to get you started using GVRS. The use of many of the functions described above is demonstrated in the PackageData.java application included in the Gridfour software distribution.

The GVRS API is still under development. While it has undergone quite a bit of testing, it has seen very little actual use. If you encounter issues using GVRS, please let us know. Also, if you identify new features or enhancements you would like added to the API, we welcome your suggestions.

References

Adobe Systems Inc., 1992. TIFF Revision 6.0, Final-June 3, 1992. Accessed December 2019 from https://www.itu.int/itudoc/itu-t/com16/tiff-fx/docs/tiff6.pdf

General Bathymetric Chart of the Oceans [GEBCO], 2019. GEBCO Gridded Bathymetry Data. Accessed December 2019 from https://www.gebco.net/data_and_products/gridded_bathymetry_data

National Oceanic and Atmospheric Administration [NOAA], 2019. ETOPO1 Global Relief Model. Accessed December 2019 from https://www.ngdc.noaa.gov/mgg/global/

Sonalysts, Inc., 2019. wXstation. Accessed December 2019 from http://www.sonalysts.com/products/wxstation/

University Corporation for Atmospheric Research [UCAR], 2019. NetCDF-Java Library. Accessed December 2019 from https://www.unidata.ucar.edu/software/netcdf-java/current/